Chatbot with the bAbI Dataset from Facebook Research

This post builds a chatbot using the bAbI dataset released by Facebook Research; there’s a link below.

The data we’re going to be working with has three main components:

- Story

- Question

- Answer (Yes/No)
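To make the three components concrete, here is a minimal sketch of one sample and of how it could be turned into padded integer sequences. The sentences are made up in the style of the dataset, and the names `word_index` and `vectorize` are hypothetical helpers for illustration, not part of any library.

```python
# Hypothetical sample in the (story, question, answer) shape described above.
# The sentences are illustrative, not copied from the dataset files.
story = "Mary moved to the bathroom . Sandra journeyed to the bedroom .".split()
question = "Is Sandra in the bedroom ?".split()
answer = "yes"

sample = (story, question, answer)

# Build a word index over everything seen; id 0 is reserved for padding.
vocab = sorted(set(story) | set(question) | {"yes", "no"})
word_index = {word: i + 1 for i, word in enumerate(vocab)}


def vectorize(tokens, max_len):
    """Map tokens to integer ids and left-pad with zeros up to max_len."""
    ids = [word_index[t] for t in tokens]
    return [0] * (max_len - len(ids)) + ids


story_ids = vectorize(story, max_len=15)
question_ids = vectorize(question, max_len=8)
print(story_ids)
print(question_ids)
```

The fixed lengths (15 and 8) are arbitrary choices for this sketch; in practice they would be the longest story and question in the data.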

Here is an example.

For the details, please see the paper “End-to-End Memory Networks”.

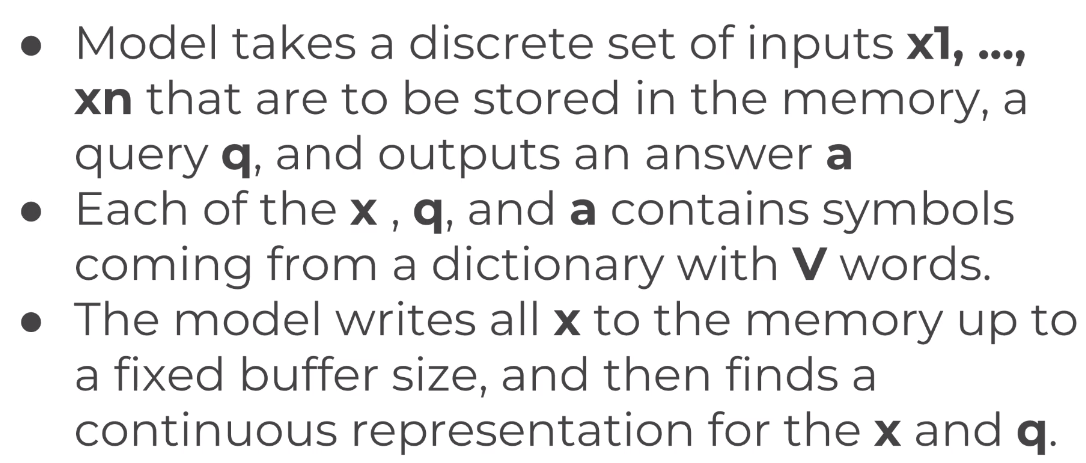

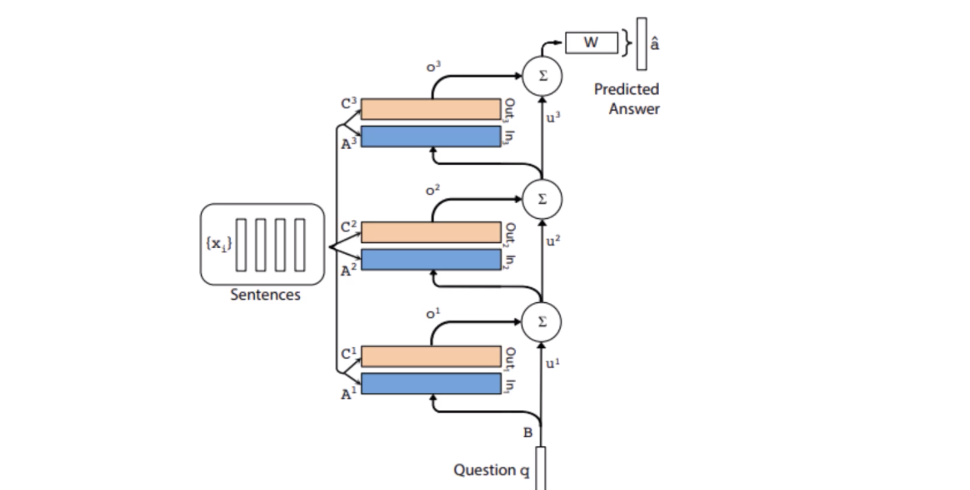

Overview of the model:

The model is built from three parts:

- Input Memory Representation

- Output Memory Representation

- Generating the Final Prediction

Finally, I created an RNN model with multiple layers.

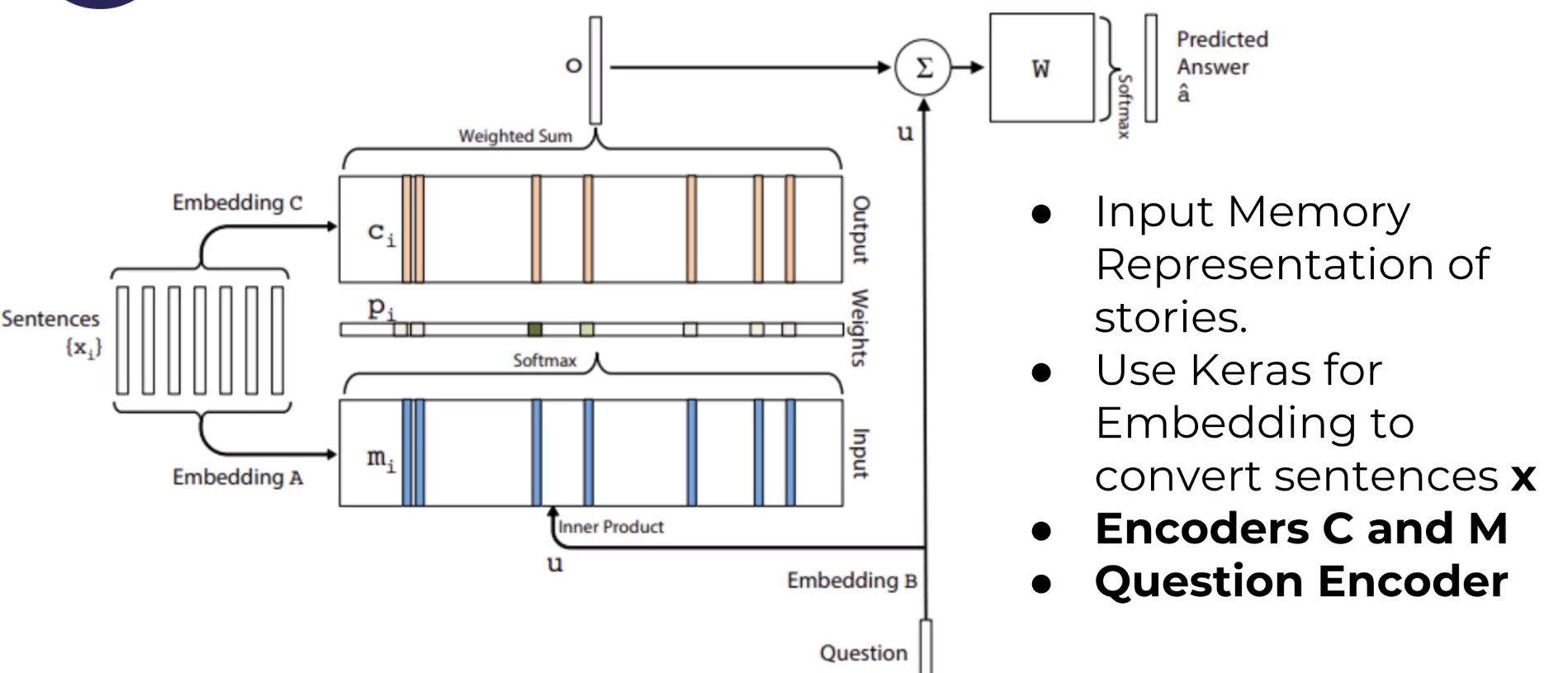

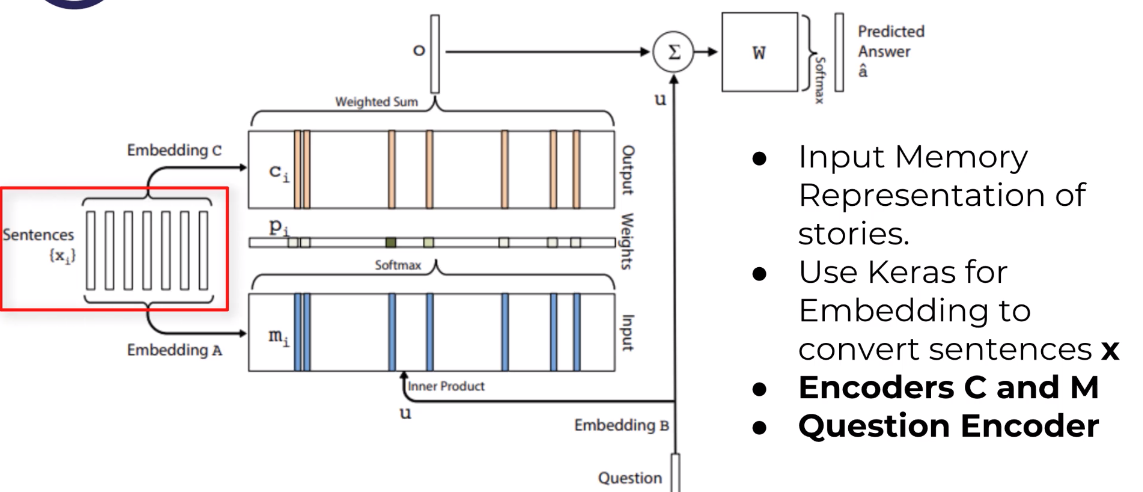

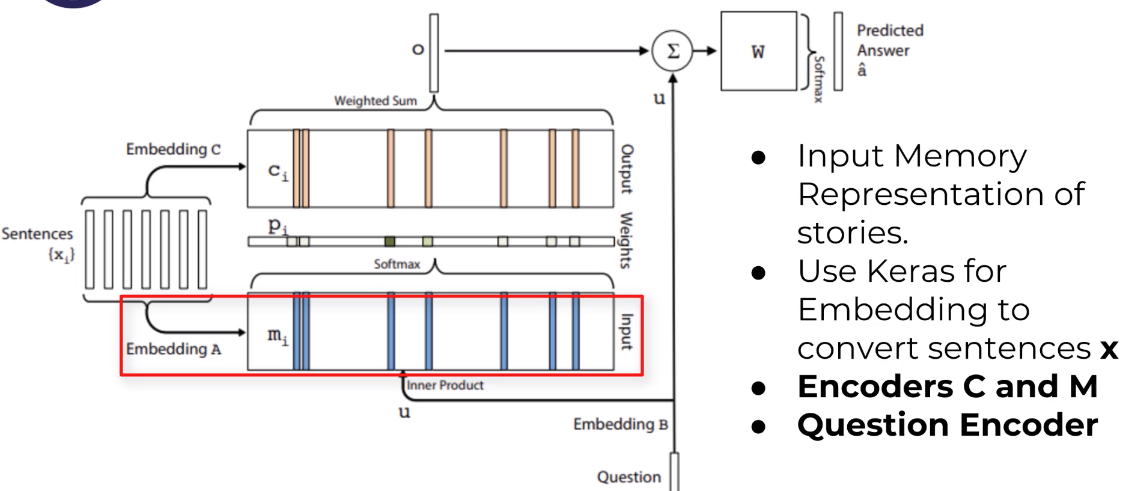

This is what a single layer looks like.

The first key component of a single layer is the input memory representation. The first thing we receive is an input set $x_1, x_2, x_3, \ldots$; these are the sentences (the story) to be stored in memory.

We convert that entire set of $x_i$ into memory vectors, which we call $m_i$.

We’ll use Keras embeddings to convert the sentences into these vectors. Later on, a second embedding (encoder) produces the output vectors $c_i$. We also receive the question, or query, called $q$; it is embedded as well, to obtain an internal state that we call $u$.

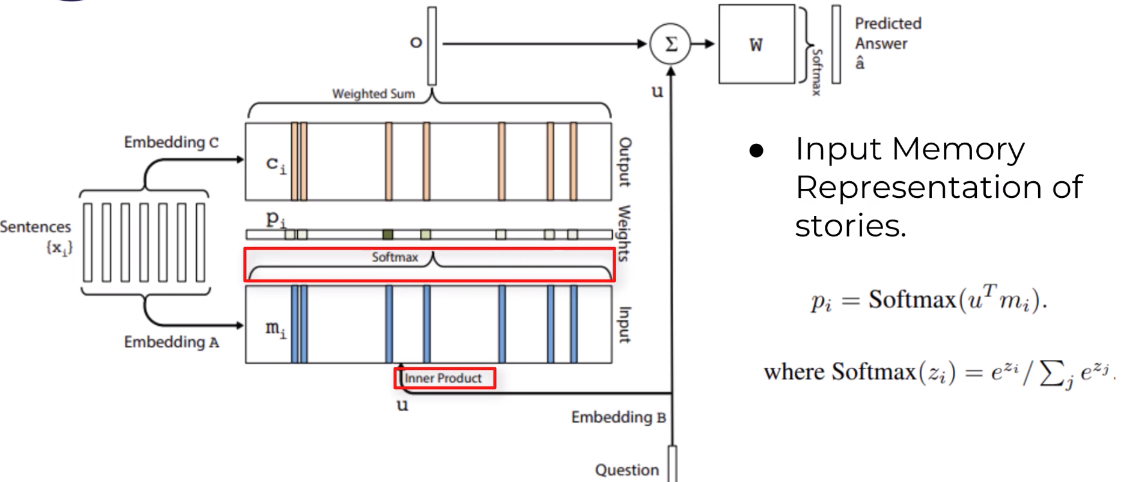
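The embedding step can be sketched in plain Python as follows. This is an illustration under assumptions, not the code from the repository: the matrix names `A` and `B` follow the paper's notation, the sizes and word ids are made up, and each sentence is encoded as a simple sum of its word embeddings. In Keras, the lookup tables would typically be `Embedding` layers.

```python
import random

random.seed(0)
VOCAB_SIZE, EMBED_DIM = 10, 4  # hypothetical sizes for illustration


def make_embedding(vocab_size, dim):
    """Random lookup table: one row of `dim` floats per word id."""
    return [[random.uniform(-0.1, 0.1) for _ in range(dim)]
            for _ in range(vocab_size)]


def embed_sentence(table, word_ids):
    """Bag-of-words encoding: sum the embedding rows of the words."""
    dim = len(table[0])
    return [sum(table[w][d] for w in word_ids) for d in range(dim)]


A = make_embedding(VOCAB_SIZE, EMBED_DIM)  # input memory embedding  -> m_i
C = make_embedding(VOCAB_SIZE, EMBED_DIM)  # output memory embedding -> c_i
B = make_embedding(VOCAB_SIZE, EMBED_DIM)  # question embedding      -> u

sentences = [[1, 2, 3], [4, 5, 3]]  # x_1, x_2 as word ids (made up)
query = [6, 4, 3]                   # q as word ids (made up)

m = [embed_sentence(A, x) for x in sentences]  # memory vectors m_i
c = [embed_sentence(C, x) for x in sentences]  # output vectors c_i
u = embed_sentence(B, query)                   # internal state u
```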

Next, we compute the match between $u$ and each memory $m_i$ by taking the inner product followed by a softmax operation within this single layer: $p_i = \mathrm{softmax}(u^\top m_i)$.

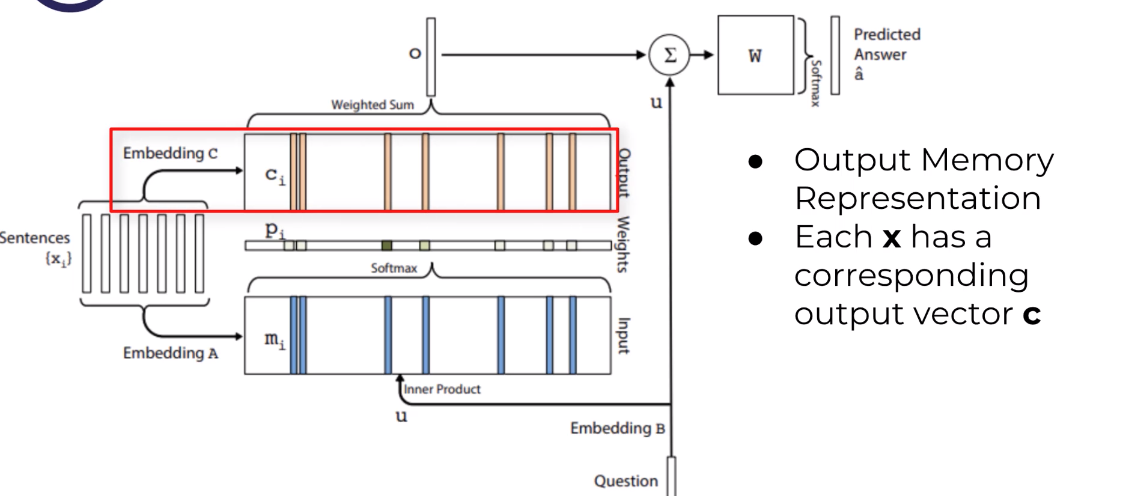
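As a small worked example of this matching step, the sketch below scores a query state against three memories with an inner product and normalizes the scores with a softmax. The vectors are toy values chosen for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


u = [1.0, 0.0]                                   # embedded question
memories = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # m_1, m_2, m_3

# p_i = softmax(u . m_i): one probability per memory slot.
p = softmax([dot(u, m_i) for m_i in memories])
print(p)
```

The first memory has the largest inner product with `u`, so it receives the largest share of the probability mass.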

That completes the input memory representation. For the output memory representation, each $x_i$ has a corresponding output vector $c_i$, given in the simplest case by another embedding matrix, which we’ll just call $C$.

The response vector from the memory, $o$, is then a sum over the transformed inputs $c_i$, weighted by the probability vector from the input: $o = \sum_i p_i c_i$. Because the function from input to output is smooth, we can compute gradients and backpropagate through it.

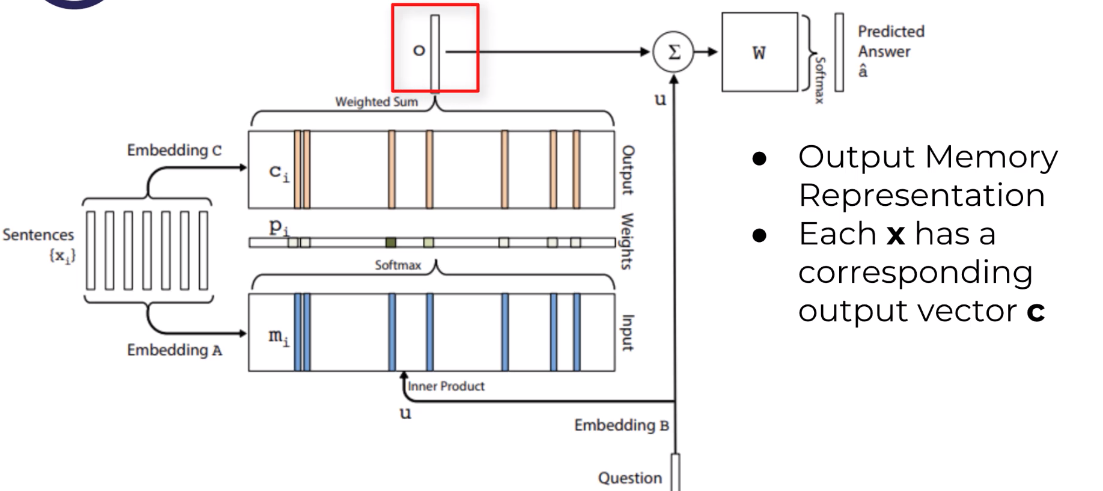
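The weighted sum that produces the response vector can be checked by hand with a toy example. The probabilities and output vectors below are made-up values, and `weighted_sum` is a hypothetical helper name.

```python
def weighted_sum(weights, vectors):
    """Return sum_i weights[i] * vectors[i], computed per dimension."""
    dim = len(vectors[0])
    return [sum(w * v[d] for w, v in zip(weights, vectors))
            for d in range(dim)]


p = [0.5, 0.25, 0.25]                     # attention over three memories
c = [[1.0, 0.0], [0.0, 2.0], [4.0, 4.0]]  # output vectors c_1, c_2, c_3

o = weighted_sum(p, c)
print(o)  # [1.5, 1.5]
```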

The third and final step is generating a single final prediction. In this step, the sum of the output vector $o$ and the input embedding $u$ is passed through a final weight matrix, labeled $W$ here, and then a softmax is used to produce the predicted label: $\hat{a} = \mathrm{softmax}(W(o + u))$.
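A minimal sketch of this prediction step, assuming a two-label (yes/no) output and a hand-picked weight matrix; in a trained model `W` would be learned, and the `predict` helper here is hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(W, o, u, labels):
    """Compute softmax(W (o + u)) and return the most likely label."""
    hidden = [o_d + u_d for o_d, u_d in zip(o, u)]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in W]
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs


W = [[1.0, -1.0], [-1.0, 1.0]]  # hypothetical weights, one row per label
o = [1.5, 0.5]                  # response vector from memory
u = [0.5, 0.0]                  # embedded question

label, probs = predict(W, o, u, ["yes", "no"])
print(label, probs)
```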

This is what the multiple layers look like.
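As a rough sketch of stacking, the snippet below repeats the single layer several times and feeds each layer the sum of the previous state and its response vector, following the update described in the paper. It assumes the same memories are shared by every layer (an RNN-like weight tying) and uses toy vectors; it is not the code from the repository.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def hop(u, m, c):
    """One layer: attend over memories m, read from c, return u + o."""
    p = softmax([sum(a * b for a, b in zip(u, m_i)) for m_i in m])
    o = [sum(p_i * c_i[d] for p_i, c_i in zip(p, c)) for d in range(len(u))]
    return [u_d + o_d for u_d, o_d in zip(u, o)]


m = [[1.0, 0.0], [0.0, 1.0]]  # input memory vectors (toy values)
c = [[0.5, 0.0], [0.0, 0.5]]  # output memory vectors (toy values)
u = [1.0, 0.0]                # embedded question

for _ in range(3):            # three stacked layers with shared memories
    u = hop(u, m, c)
print(u)
```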

Please check my repository at the following link. Chatbot_github

I also want to thank Jose and Pierian Data for the clear explanation. Some of the pictures are taken from his presentation. I recommend his course at the following link. nlp-with-python

Have fun!